It started as a joke. I swear.

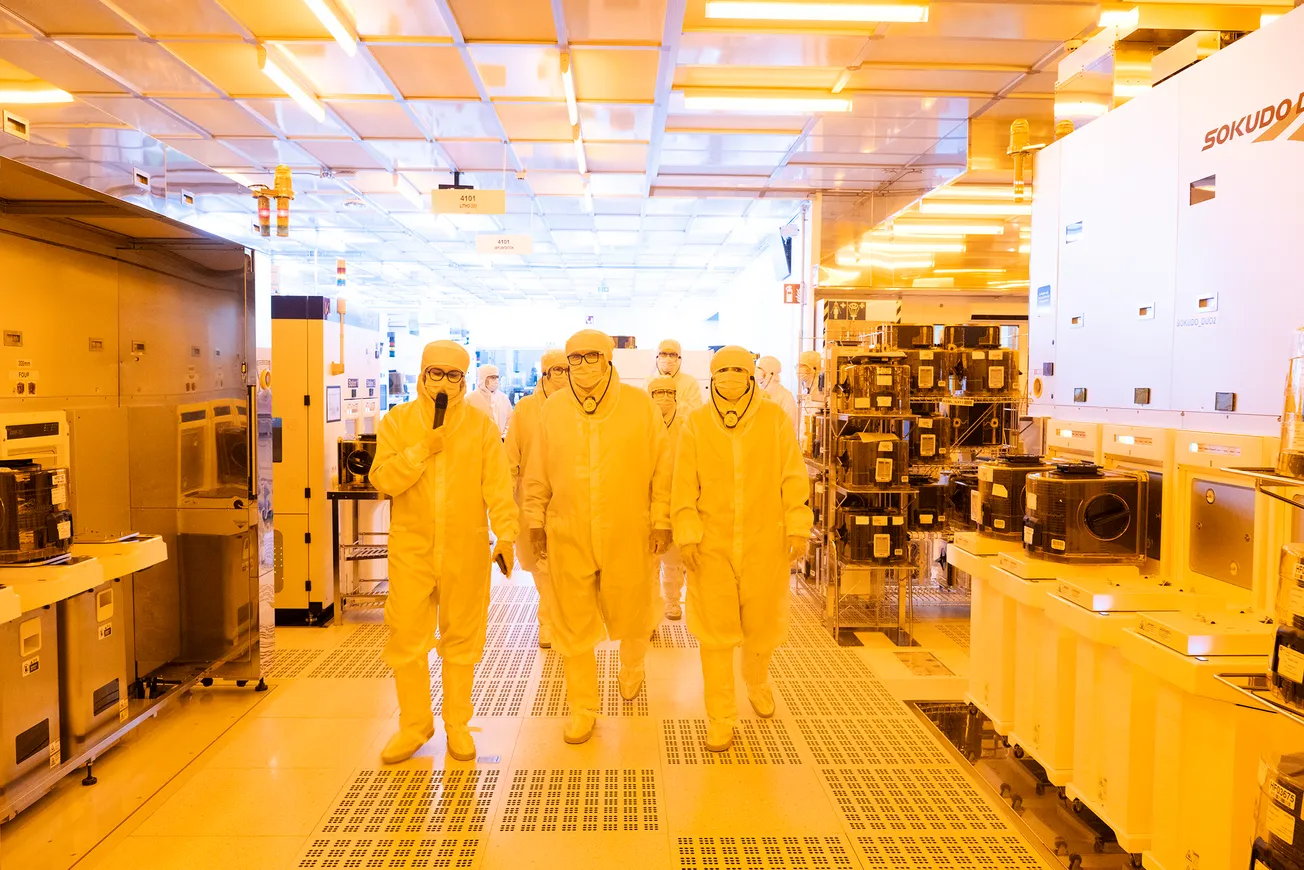

A few months ago, a certain French startup had raised a round of funding and sent out a photo of the founding team that looked unusually, well, cool. Several of us were making jokes online about how they had the vibe of an alt indie band.

And so, as one does these days, I uploaded the photo to ChatGPT and prompted, "Suggest a name for this band based on the mood and the haircuts and the clothes."

ChatGPT replied:

"Nice — this group has a very modern, understated, creative energy. The mix of casual black clothing, natural lighting, and expressive (but unfussy) hairstyles gives off a vibe that’s equal parts art school minimalism and indie confidence."

Here are some name ideas inspired by that aesthetic:

🎧 Minimalist / Dreamy

- Soft Static – calm, modern, slightly mysterious

- Hollow Light – fits the bright-white background and reflective mood

- Echo State – sleek and evocative, feels like something you’d see at a festival

- Velvet Circuit – smooth yet tech-tinged

🪩 Indie / Alternative

- The Low Frames – understated but stylish, referencing the composition of the shot

- North Arcade – nostalgic and melodic, fits a youthful, creative band

- Glass Season – poetic and introspective, like a chillwave or indie rock group

- Motive – minimalist and punchy, fits their clean aesthetic

⚡ Slightly Edgier

- Blackwave – dark palette, modern, a little mysterious

- Slow Voltage – balance between calm and energy

- The Quiet Rebellion – subtle and thoughtful but with attitude

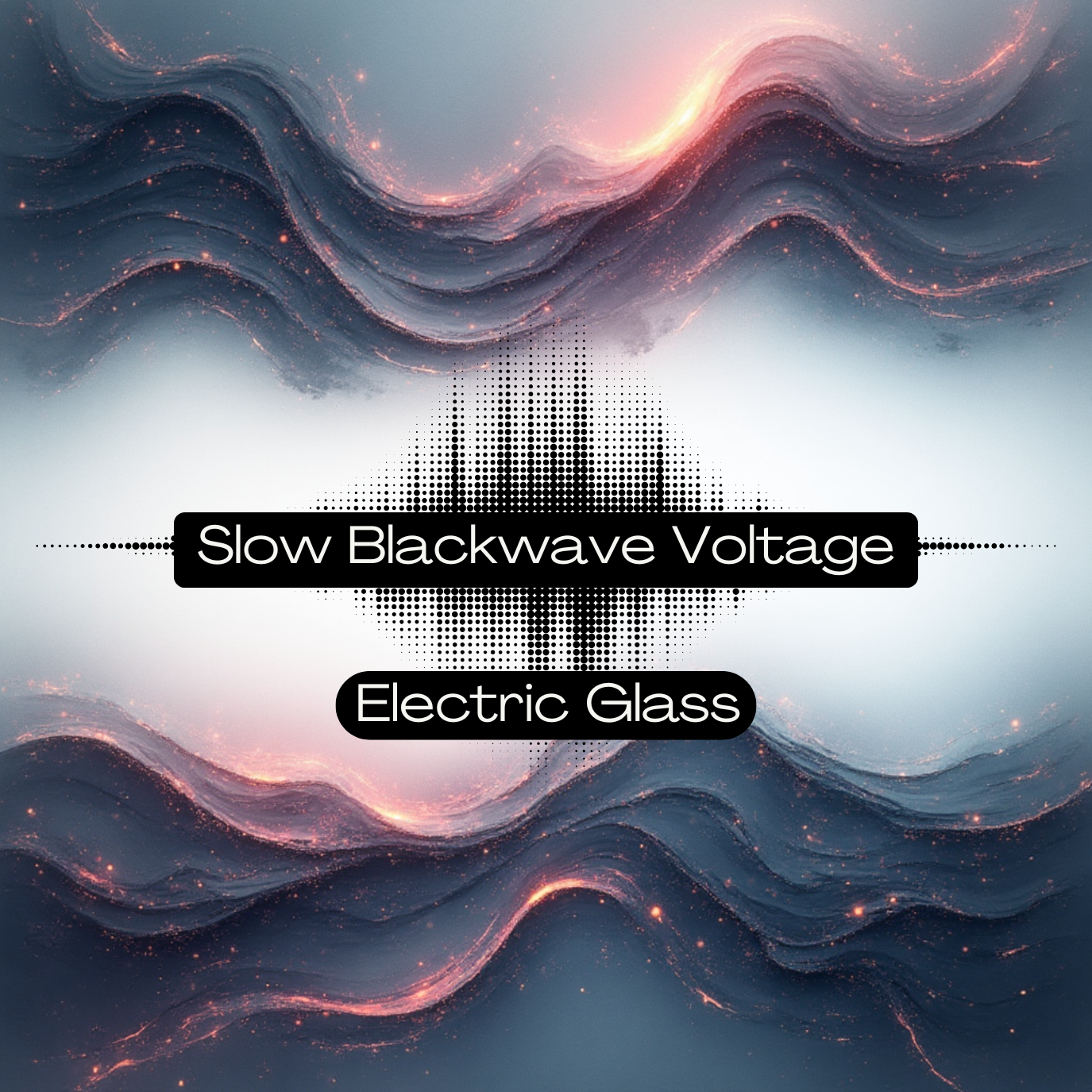

After a little bit of back and forth, ChatGPT finally suggested a name: "Slow Blackwave Voltage."

And then...it took the liberty of suggesting a name for the band's first album: "Electric Glass."

This lasted about 2 or 3 minutes, but ChatGPT was into it. Would you like me to create a press release for you? Band bios? Track listing? Magazine interviews? Song lyrics?

This is one of the underappreciated but clever design features of these tools. At a certain point, they start to prompt you.

Whoa, buddy. Slow your roll. I've got to get back to work.

But later that evening, curiosity got the better of me, so I returned to the chat, and I told it to write the lyrics to one of the tracks it had invented: "Mirrors Sleep." Which it did:

“Mirrors Sleep”

(by Slow Blackwave Voltage)

Verse 1

You said the night was made of glass,

And we were walking through ourselves.

Every window whispered back,

Every echo broke the spell.

Pre-Chorus

There’s a current under silence,

A line between the sound —

I can feel you in the static,

But you’re nowhere to be found.

Chorus

When mirrors sleep, we disappear,

Our faces fade, but stay so near.

In quiet light, we lose control,

Reflections dreaming, swallow whole.

Verse 2

Your shadow hums in grayscale tones,

The city hums a borrowed song.

We trade our names for radio noise,

And drift where we don’t belong.

Pre-Chorus

I can’t tell what’s real or breathing,

Or what the glass believes —

You look at me through broken timing,

And I fall between the screens.

Chorus

When mirrors sleep, we disappear,

Our faces fade, but stay so near.

In quiet light, we lose control,

Reflections dreaming, swallow whole.

Bridge

The voltage hums beneath the rain,

A pulse that doesn’t know your name.

We’re ghosts in rooms of silver skin,

The night begins, the night begins.

Outro

So let the mirrors sleep tonight,

Let every shadow learn to lie.

I’ll wait where silence used to be —

For you, for me, for memory.

I had already been playing with Eleven Labs, Europe's red-hot Voice AI unicorn, with some interesting results for some other projects. But I had never bothered with the "music" section because AI music...bleh...

But, what the hell...I pasted the lyrics into Eleven Labs. And then I pasted in a condensed version of the band's press release that ChatGPT had written describing their musical style. I clicked "generate," and in about 15 seconds, this is the track that Eleven Labs gave me, with no further refinement or editing:

Fast forward six weeks, and Slow Blackwave Voltage (by which I mean me) has released "Electric Glass," its debut album, which can now be streamed on Spotify, Amazon Music, Apple Music, YouTube, etc.

You might be surprised that I would spend so much time doing this. But the really surprising thing is that it doesn't take that much time.

My reason for writing about this now, however, isn't to promote my new AI band. Instead, I want to talk about two things I've taken away from this.

First: I don't think people truly grasp how far audio AI has advanced. People hear "AI," and they think "slop." This is not slop. You might not like it. But the quality is pretty astonishing. Which is why it is becoming one of the hottest sub-sectors for AI startups and investors.

Second: We haven't really grappled with the implications of how transformative Voice AI will be, artistically and economically. France's Deezer reported last November that 34% of all audio tracks it receives every day (30,000) were fully AI-generated. That was up from 10% in January. The company conducted an additional study in which 97% of the subjects could not tell AI tracks from human ones. Just the music portion of it is an ethical and legal minefield that makes rap sampling and Napster look like kiddie stuff.

This is way beyond that.

The Quiet Revolution

Natural language processing has been around for decades, but it's also been a pretty static, boring business. Dragon NaturallySpeaking was the dominant speech-to-text software forever. By the time the early versions of Otter.ai were released in 2018, those AI transcriptions felt like a quantum leap into the future.

But as tends to happen with these things, what feels like glacial progress on the surface is really our inability to appreciate a wide range of factors moving rapidly, but almost imperceptibly, until they align and then, whoosh, the conditions become just right. A wide range of technical pieces, including speech recognition, text-based reasoning, and speech synthesis, were stitched together with lower latency and improved architecture to create better performance and reasonable economics.

Blah, blah, blah.

The result is that the trendy new thing to talk about is: "Voice Is The New Interface."

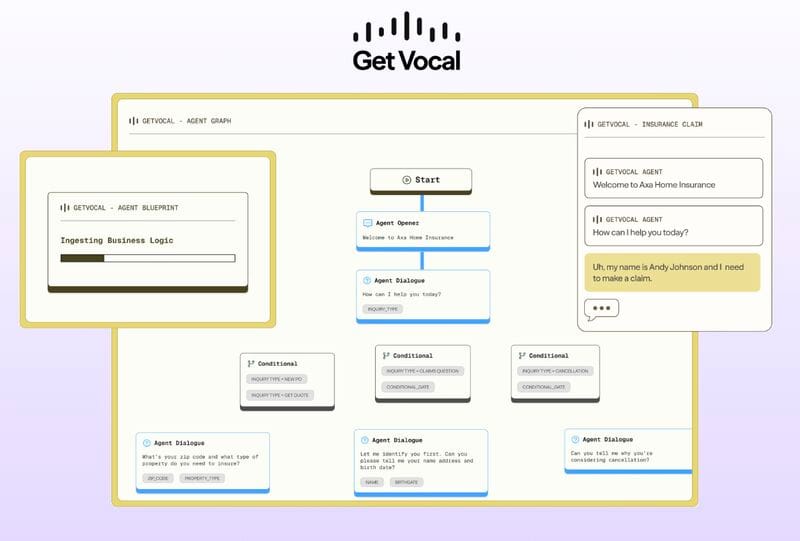

When I interviewed GetVocal CEO and Co-Founder Roy Moussa last November after the Paris-based company raised a $26M Series A for its agentic AI voice system, he described the shift to voice as instinctive.

“Voice, I would say it's the oldest interface, right?” he said. “When we were cavemen, we passed on stories by mouth. Now we just did a loop around and went back to the richest modality we have in explaining our thoughts.”

Now, teens are talking to ChatGPT. People (including me) are installing Wispr Flow on their devices to speak rapidly for ideas, emails, dictation, and even coding. But while the consumer side has been interesting, there is now a whole ecosystem of infrastructure players and enterprise voice AI platforms for use cases like call centers, health care, industry, and many more. There is a good chance you've been called by an AI telemarketer and didn't know it.

VCs are indeed hip to it. Just this week, Deepgram, a US-based real-time API platform for the Voice AI economy, raised a $130 million Series C round at a $1.3 billion valuation.

In Europe, the big star is Eleven Labs. The company just announced it had zipped past $330 million in annual recurring revenue (ARR). The company was founded in 2022 and only launched its first product in 2023.

France is now seeing the development of its own Voice AI ecosystem.

Late last year, Gradium made a big splash when the Paris startup that was spun out of research lab Kyutai emerged from stealth with a $60M seed round to become the global foundation layer for real-time voice interactions.

In 2025, other French Voice AI funding rounds included:

- January: Kraaft raised €13.0M for its platform that reinvents interfaces in factories through voice recognition and natural language processing (NLP). Investors: Brick & Mortar Ventures, Stride.VC, Chalfen Ventures, Dawn Capital, OSS Ventures.

- January: Tuito raised €1.0M to provide design and development services in enhanced tactile and voice command user experiences. Investors: N1, Purple Ventures.

- April: Pyannote raised €8.1M for its solution for conversational speech and voice recognition. Investors: Kima Ventures, Serena, Crane Venture Partners, Julien Chaumond, Pareto Holdings, Motier Ventures.

- July: E-Sensia raised €1.5M for its platform that provides voice-based automated assistance services to healthcare businesses. Investors: Business Angels (Sylvain Gariel, Jean-Baptiste Kempf, Jeremy Blackwell).

- July: Diago raised €1.0M for its intelligent voice assistant for automotive distribution professionals. Investors: Groupement des Concessionnaires Automobiles Peugeot.

- November: Beside announced it had raised a round of $32M for its AI-powered telephony & messaging assistant for small businesses that captures inbound calls and texts and turns them into actions (bookings, follow-ups, notes, scheduling) by rebuilding the full telephony stack with AI for the “real economy.”

Am I Going To Hell?

Of course, if you're reading...or listening...to any of this and your reaction is...ick...gross...make it stop...

...you are not alone.

The reality of these tools is that they are largely trained on existing content. Eventually, synthetic and proprietary data will make up greater proportions. But for general-purpose LLMs and consumer-facing applications, it's whatever exists out in the universe that can be scooped up.

Just this month, Stanford and Yale researchers demonstrated that jailbroken Claude 3.7 Sonnet "outputs entire books near-verbatim (e.g., nv-recall=95.8%)."

There are many legal, ethical, and moral quandaries here. Not to mention all sorts of potential Black Mirror-type weirdness. But let's circle back to the music part of this conversation.

As I went down the rabbit hole, I found that ultimately, Eleven Labs was pretty limited when it came to music. So I signed up for Suno, a U.S.-based music generation app.

Suno raised $250 million in November at a $2.45 billion valuation. It was started two years ago and has 100 million users. It was also being sued by Warner Music Group, Universal Music Group, and Sony Music Group for using their music to train its service. Sounds like all-out war except...just a couple of weeks later, Warner settled with Suno and agreed to license its content. Universal settled with a different music generation app, Udio.

And so, the first dam has broken.

From there, the desire to hold back this rising tide is mixed. In the case of Suno, currently, I could generate songs at a certain paid tier, download them, and own the rights. Under the new deal, later this year, it will get more expensive and more complicated for Suno users.

Once you create music, there is a challenge of distributing it. One can't simply click a button and upload a track to Spotify. You have to go through official music distribution partners. And those partners have a wide range of policies when it comes to AI-generated content.

For instance, TuneCore, a subsidiary of France's Believe, unsurprisingly has a strict policy against AI-generated content. Except...the artist Grimes created a tool called GrimesAI that allows people to create content using a simulation of her voice. So, TuneCore is kinda sorta okay with songs that use GrimesAI.

I used a service called DistroKid, which has a more "don't ask, don't tell" policy when it comes to AI-generated content. Once I uploaded all of the tracks and set a release date, there wasn't much pushback from any of the big platforms. All of them have approved official music channels for Slow Blackwave Voltage.

The notable exception is Deezer, which seems to be waging a lonely battle here against music's AI future. As the company noted in its press release last November, it is the only major platform labeling AI content.

“The survey results clearly show that people care about music and want to know if they’re listening to AI or human made tracks or not," CEO Alexis Lanternier said in a statement. "There’s also no doubt that there are concerns about how AI-generated music will affect the livelihood of artists, music creation and that AI companies shouldn’t be allowed to train their models on copyrighted material. It’s reassuring to see that we have broad support for our efforts.”

When people do know it's AI music, they definitely get the creeps. But what happens when they don't know? Last year, the AI band "The Velvet Sundown" got one million monthly Spotify listeners. It seems like only a matter of time before the Grammys create a category for AI artists. Here's another fun thought experiment: Can you imagine a world where everyone just generates their own music, and no two people on the planet are listening to the same song...ever? Why bother looking for new music when I can just create new music that exactly suits my taste?

As a headline in Paste magazine put it late last year: "The only thing worse than bad AI music is good AI music: What happens when the computer-generated slop is tolerable?"

Indeed, this is not ChatGPT creating images of kids with six fingers and missing a leg, the standard stuff that comes to mind when you hear "AI slop."

Last week, riffing on another bit of randomness, ChatGPT had thrown the phrase "Les Echos on espresso" at me. So while taking the Paris Metro home, I dropped that into ChatGPT, and prompted: "Write the lyrics for a song called 'Les Échos on Espresso' that has a playful, caffeinated, dreamy, pop vibe." Then I took those lyrics and put them into Suno with the same musical prompt.

Within three minutes, start to finish, this is the song AI had created. If you heard this on Spotify, the radio, or in the gym, would you honestly know it was AI? And would it matter? Should it matter?

Welcome to the rabbit hole. 🎵